Aceso ML

Jul 2020 - Oct 2020

Let patients watch their own progress, on demand

This is a long read; skip ahead if you want.

As mentioned before, there is a major problem with the way home exercise plans are carried out in physical therapy. Though they often feel like an afterthought and are rarely adhered to, home exercise plans are essential to a quick and full recovery after surgery. We wanted to change this.

We set out to organize our library of ~300 Peloton-style PT workouts, all doable from home, into progress-based phases. We learned there are 2 essential indicators of muscle redevelopment after surgery:

- Strength

- Range of motion

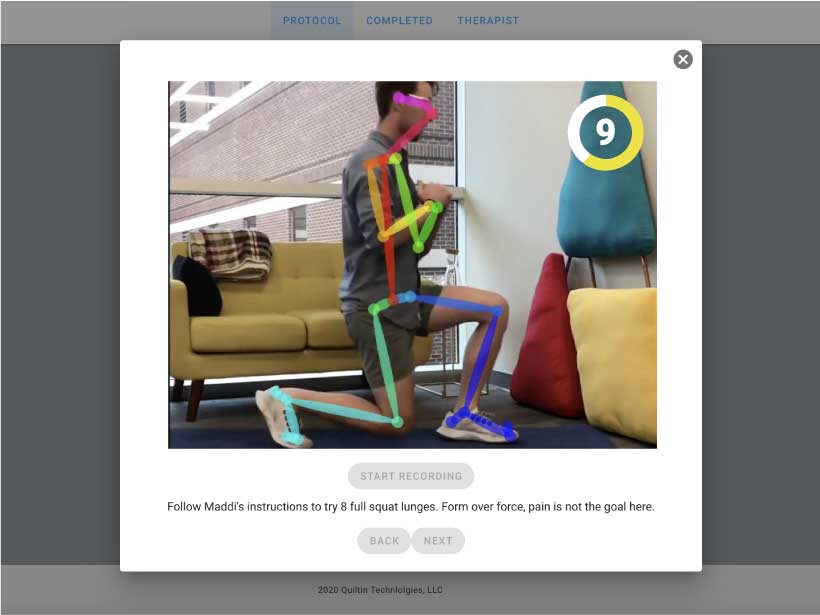

Strength would be difficult to turn into a computer-measurable KPI, but range of motion proved more accessible. With the help of human pose estimation computer vision models, we found a way to get the X and Y coordinates of keypoints on different joints and body parts using a single webcam.

Human pose estimation needs serious GPU power to run in a reasonable time, so I set about learning how to use GPU VM instances on AWS EC2 and Google Compute Engine (GCE). After nearly 2 weeks of toiling between cloud providers and Linux distros, I decided Debian on GCE was the most approachable option for someone with minimal DevOps experience beyond some intense Raspberry Pi projects in high school.

Next, I had to decide which model to use. I had a hunch that no open-source pose estimation model would be trained well enough to perform our tasks in the real world, so I learned how to train YOLOv4 object detection models in TensorFlow to look for an object that was impossible to miss: 3 bright green circles cut from card stock and glued to cardboard, with a safety pin sticking out the back so I could attach it to my pants. The goal was a proof of concept (PoC) that measured flexion of the knee.
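Once a detector returns three boxes per frame, the circles still have to be turned into joint positions before any angle can be measured. A minimal sketch, assuming boxes arrive as hypothetical `(x_min, y_min, x_max, y_max)` pixel tuples (the PoC's actual output format isn't described here); the helper names are illustrative only:

```python
from typing import List, Tuple

# Hypothetical types: detector boxes as (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]
Point = Tuple[float, float]


def box_center(box: Box) -> Point:
    """Center point of an axis-aligned bounding box."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2.0, (y_min + y_max) / 2.0)


def order_leg_markers(boxes: List[Box]) -> Tuple[Point, Point, Point]:
    """Return the (hip, knee, ankle) marker centers for one frame.

    Assumes exactly three detections on a standing subject whose foot stays
    below the knee. Image y grows downward, so sorting by ascending y gives
    top-to-bottom order. Once the foot rises above the knee this ordering
    breaks, and markers would need to be tracked across frames instead.
    """
    if len(boxes) != 3:
        raise ValueError(f"expected 3 marker detections, got {len(boxes)}")
    hip, knee, ankle = sorted((box_center(b) for b in boxes), key=lambda p: p[1])
    return hip, knee, ankle
```

For example, boxes centered at y = 210, 310, and 410 come back as hip, knee, and ankle in that order, regardless of the order the detector listed them.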

I converted the TensorFlow model to TensorFlow.js (TFJS) so I could run inference in the browser, even in CodePen on my iPad.

As you can see, the results were shaky, and the frame-by-frame data would have been unusable.

So I had a new mission: find a pre-trained model, to avoid having to snap and label 50,000+ images of green circles in different places, and get some usable data out of it. After many tests with different models, libraries, frameworks, and datasets, I found CMU's OpenPose.
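OpenPose can write its detections to one JSON file per frame (the `--write_json` flag), where each person's `pose_keypoints_2d` is a flat list of x, y, confidence triplets in the BODY_25 layout. A hypothetical sketch of pulling the leg keypoints out of one such frame; the confidence cutoff and helper name are assumptions, not taken from the Aceso ML code:

```python
import json
from typing import Dict, List, Optional, Tuple

# Leg indices from OpenPose's BODY_25 keypoint layout.
BODY_25_LEGS = {"RHip": 9, "RKnee": 10, "RAnkle": 11, "LHip": 12, "LKnee": 13, "LAnkle": 14}


def parse_frame(
    json_text: str, min_confidence: float = 0.1
) -> Optional[Dict[str, Tuple[float, float]]]:
    """Return named leg keypoints for the first detected person, or None.

    The first person is not guaranteed to be the patient if several people
    are in frame. Keypoints below min_confidence (a hypothetical cutoff;
    OpenPose reports 0 for parts it did not detect) are dropped.
    """
    frame = json.loads(json_text)
    people = frame.get("people", [])
    if not people:
        return None
    flat: List[float] = people[0]["pose_keypoints_2d"]
    points: Dict[str, Tuple[float, float]] = {}
    for name, idx in BODY_25_LEGS.items():
        # Each keypoint occupies three consecutive slots: x, y, confidence.
        x, y, c = flat[3 * idx: 3 * idx + 3]
        if c >= min_confidence:
            points[name] = (x, y)
    return points
```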

I was happy with the results from the demo, and decided the data smoothing they'd implemented was worth more than the time it would take me to recreate it myself.
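The sketch below is not taken from OpenPose; it is a generic centered moving average, shown only to illustrate what smoothing does to a jittery per-frame series such as a knee angle. The window size is an arbitrary assumption, and frames with no detection are passed in as `None`:

```python
from typing import List, Optional


def moving_average(series: List[Optional[float]], window: int = 5) -> List[Optional[float]]:
    """Centered moving average over per-frame values.

    Frames with no measurement (None) are skipped when averaging; a frame's
    output is None only if every value in its window is missing.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    out: List[Optional[float]] = []
    for i in range(len(series)):
        # Clip the window at the start and end of the recording.
        neighbors = [v for v in series[max(0, i - half): i + half + 1] if v is not None]
        out.append(sum(neighbors) / len(neighbors) if neighbors else None)
    return out
```

A wider window removes more jitter but also blunts real peaks, which matters when the peak flexion is the number a therapist cares about.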

TL;DR

With a decent idea that this might just work, I spent about 2 months drinking Bang energy daily, with an air mattress in my office, sleeping only 30-45 minutes a day in a frantic effort to prepare a demo for a potential investor. Essentially, I closed everything else out of my life to focus on learning what it takes to queue jobs with Redis, install SSL certificates, spin up a server from the OS up, configure firewall rules, draw on video frames with Python's OpenCV (cv2), manage job statuses in a database, record from the webcam in a Vue app, and respond to job status changes.
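The job status handling described above can be sketched as a small state machine. The status names, allowed transitions, and `on_change` hook are hypothetical; in the real system the state lived in a database and jobs were queued through Redis, neither of which is modeled here:

```python
from enum import Enum
from typing import Callable, List, Optional


class JobStatus(Enum):
    QUEUED = "queued"
    PROCESSING = "processing"
    DONE = "done"
    FAILED = "failed"


# Allowed transitions; anything not listed is rejected.
TRANSITIONS = {
    JobStatus.QUEUED: {JobStatus.PROCESSING},
    JobStatus.PROCESSING: {JobStatus.DONE, JobStatus.FAILED},
    JobStatus.FAILED: {JobStatus.QUEUED},  # a failed job may be re-queued
    JobStatus.DONE: set(),
}

Listener = Callable[["Job", JobStatus, JobStatus], None]


class Job:
    def __init__(self, job_id: str, on_change: Optional[Listener] = None):
        self.job_id = job_id
        self.status = JobStatus.QUEUED
        self.history: List[JobStatus] = [JobStatus.QUEUED]
        self.on_change = on_change

    def advance(self, new_status: JobStatus) -> None:
        """Move to new_status if the transition is allowed, then notify the listener."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(
                f"{self.job_id}: cannot go from {self.status.value} to {new_status.value}"
            )
        old = self.status
        self.status = new_status
        self.history.append(new_status)
        if self.on_change is not None:
            self.on_change(self, old, new_status)
```

Rejecting illegal transitions in one place keeps a crashed or duplicated worker from, say, marking a finished job as processing again, and the listener is where a client update would be triggered.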

The combination of this new system with our video library, therapist dashboard, and patient video client allowed patients to complete appropriate physical therapy from home, take progression tests to view and track their progress objectively, and await their PT's or surgeon's approval before moving on to more intensive workouts.
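The approval gate can be expressed as a single check. A hypothetical sketch: the field names are invented, and the target angle is passed in by the caller because the clinical thresholds Aceso ML used aren't stated here:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProgressionTest:
    phase: int
    measured_flexion_deg: float
    approved_by: Optional[str] = None  # set once a linked PT or surgeon verifies the result


def can_advance(test: ProgressionTest, current_phase: int, required_flexion_deg: float) -> bool:
    """A patient moves past current_phase only if the test belongs to that
    phase, meets the clinician-set target, and has been approved."""
    return (
        test.phase == current_phase
        and test.measured_flexion_deg >= required_flexion_deg
        and test.approved_by is not None
    )
```

Requiring `approved_by` means a mis-detected angle can never unlock harder workouts on its own; a human has to sign off first.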

Our goals

- Excite patients to stick with their home exercise plan

- Give patients direct access to data showing their progress

- Clarify the roadmap to full recovery

What we built

Like the current Aceso, we needed 2 web apps: first, a dashboard for therapists to manage their practice and patients, and second, a video player for patients to watch the video playlists prescribed by their linked medical professionals. The major difference between Aceso ML and the current Aceso is the phase-based system, which moves patients forward based on the results of progression tests.

- Guide patients and record tests in the browser using the getUserMedia API

- Save test recordings in a NoSQL database and queue jobs on a Google Compute Engine Debian VM

- Run machine learning inferences to detect 25 keypoints on the human body

- Use detected keypoints and the Pythagorean theorem to calculate the angle of flexion for each frame of the recorded test

- Notify linked therapists and surgeons of test results, to be verified as correct before allowing a patient to progress
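The Pythagorean theorem on its own yields distances between keypoints, so this sketch pairs it with the law of cosines to turn three distances into the angle at the knee. The exact formula Aceso ML used isn't stated, so treat this as one possible approach; the "0 degrees means a straight leg" convention is also an assumption:

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def distance(p: Point, q: Point) -> float:
    # Pythagorean theorem: sqrt(dx^2 + dy^2).
    return math.hypot(q[0] - p[0], q[1] - p[1])


def knee_flexion_deg(hip: Point, knee: Point, ankle: Point) -> float:
    """Knee flexion in degrees, where 0 means a fully straight leg.

    Segment lengths come from the Pythagorean theorem; the interior angle K
    at the knee comes from the law of cosines:
        cos(K) = (a^2 + b^2 - c^2) / (2ab)
    with a = |hip-knee|, b = |knee-ankle|, c = |hip-ankle|.
    Flexion is then 180 - K.
    """
    a = distance(hip, knee)
    b = distance(knee, ankle)
    c = distance(hip, ankle)
    if a == 0 or b == 0:
        raise ValueError("keypoints overlap; the angle is undefined")
    cos_k = (a * a + b * b - c * c) / (2 * a * b)
    cos_k = max(-1.0, min(1.0, cos_k))  # guard against rounding just outside [-1, 1]
    interior = math.degrees(math.acos(cos_k))
    return 180.0 - interior
```

A straight leg gives 0° and a right angle at the knee gives 90°. Because the angle is measured in the 2D image plane, it is only meaningful when the leg moves roughly parallel to the camera.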

This was our 3rd major iteration of Aceso. Along the way we've built big machines, machine learning systems, and several native and web apps, many of which we've deprecated as we learned. We're still working on it.
